What is a Computer?


A computer is a machine (mainly electronic) that can take information (input) and process it to generate new information (output). Calculating machines have been around for much of human history. A computer is a programmable machine. The two main characteristics of a computer are: it responds to a specific set of instructions in a well-defined way, and it can execute a list of prerecorded instructions (a program).

Modern computers are very different from the first computers. They can do billions of calculations per second. Most people have used a personal computer at home or at work. Computers do many different jobs where automation comes in handy. Some examples are traffic light control, vehicle computers, security systems, washing machines, and digital televisions.

History of Computers

Computers truly became big business in the last two decades of the 20th century. But their history dates back more than 2,500 years to the abacus: a simple calculator made of beads and strings, which is still used in some parts of the world today. The difference between an ancient abacus and a modern computer seems vast, but the principle (doing repetitive calculations faster than the human brain) is exactly the same.

In the 1830s, a man named Charles Babbage designed a machine with most of the basic parts that are now used in a modern computer. But it was less than 100 years ago that the first “modern” computers were built.

The First Computer in the World: Discover the History of Computer Hardware for Kids

Konrad Zuse began building the Z1 in 1936. It is often described as the world’s first programmable computer. He followed it with the Z2, one of the first electro-mechanical computers, around 1939.

The first computers were built around 1940. They were the size of a large room and used lots of electricity. Can you imagine having a computer the size of a large room? How would you sit in front of it?

The personal computers we know today only became common around 1980.

In 1980, the world’s first gigabyte hard drive was released. It cost a massive $40,000 and weighed 550 pounds (about 250 kg). How could they move it?

The Original Devices

Humanity has used devices to assist in computing for millennia; an example is the abacus. The first machines that could solve an arithmetic problem began to appear in the 1600s. They were limited to addition and subtraction at first, but later they were also able to carry out multiplication. These devices used technology such as gears and cogs that were first developed for clocks. The machines of the 1800s could perform a long sequence of such calculations to build mathematical tables, but they were not in common use.

The defining characteristic of a ‘universal computer’ is programmability, which allows the computer to compute by changing a sequence of stored instructions. In 1801, Joseph-Marie Jacquard developed a loom in which the pattern to be woven was controlled by punched cards. The series of cards could be changed without changing the mechanical design of the loom. This was a crucial point in programmability.
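To see why swappable cards matter, here is a small Python sketch. It is only an illustration, not how a real Jacquard loom worked inside: the `weave` function and the card format (`O` for a hole, `.` for no hole) are made up for this example. The "loom" stays the same, and only the deck of cards changes.

```python
# Hypothetical sketch: one unchanging "loom" that weaves whatever the cards say.
def weave(cards):
    """Turn a deck of punched cards into rows of a woven pattern.

    Each card is a string: 'O' is a punched hole (thread shows as '#'),
    '.' is no hole (thread hidden, shown as a space).
    """
    rows = []
    for card in cards:
        row = "".join("#" if spot == "O" else " " for spot in card)
        rows.append(row)
    return rows


# Two different "programs" for the same loom (made-up patterns).
stripes = ["OOOO", "....", "OOOO"]
checks = ["O.O.", ".O.O", "O.O."]

for row in weave(checks):
    print(row)
```

Swapping `checks` for `stripes` changes the pattern without touching `weave` at all, which is the idea behind a programmable machine.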

History of Computers Timeline

In 1837 Babbage described his Analytical Engine. It was the blueprint for a general-purpose programmable computer, employing punched cards as input and the power of a steam engine. Although the plans were sound, the lack of precision of the mechanical parts, the disputes with the craftsmen who made the parts, and the end of government funding made it impossible to build. Ada Lovelace, Lord Byron’s daughter, wrote the best surviving report on programming the Analytical Engine, and she seems to have actually developed some programs for it. These efforts have led many to call her the world’s first computer programmer. A Difference Engine, another of Babbage’s machines, has since been built from his original design and is operational at the Science Museum in London, where it works exactly as Babbage designed it.

In 1890, the United States Census Bureau used punch card machines and sorting machines designed by Herman Hollerith to handle the flood of data from the decennial census mandated by the Constitution. Hollerith’s company eventually became part of IBM.

In the 20th century, electricity was first used for punch card machines and sorting machines. The first mechanical calculators, cash registers, accounting machines, and others were redesigned to use electric motors. Before World War II, mechanical and electrical analog computers were the state of the art; these were thought to be the future of computing.

Evolution of Computers

Analog computers use continuously varying physical quantities, such as voltages and currents, or the rotational speed of shafts, to represent the quantities that are being processed. An ingenious example of this type of machine was the Water Integrator built in 1936. Unlike modern digital computers, analog computers are not very flexible, and need to be reconfigured (reprogrammed) manually to switch from one problem to another. Analog computers had an advantage over early digital computers in that they could be used to solve complex problems, while early digital computers were very limited. But as digital computers have gotten faster and gained larger memories (for example, RAM or internal storage), they have almost completely displaced analog computers.

Abacus and Adding Machines

The first computers did not have electrical circuits, monitors, or memories. The abacus, an ancient adding machine often associated with China, is one of the original computing machines, used as early as 400 BC. It can’t do many of the calculations that a modern electronic calculator can do, but in the right hands it can make adding up large sums as easy as moving beads around. Famous thinkers like Leonardo da Vinci and Blaise Pascal designed more sophisticated calculators using gears.

Computer: Vacuum Tube

The invention of the vacuum tube in 1904 launched a revolution in computers. A vacuum tube is a sealed tube from which nearly all the air and gas have been removed, making it well suited to controlling electrical circuits. By using hundreds or thousands of vacuum tubes as switches, a computer can perform calculations by turning circuits on (current flow) or off (no current flow). Computers built before 1950 frequently had vacuum tubes in their processors.
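How can switches that are only on or off do math? Here is a small Python sketch of one common approach, using `True` for "on" and `False` for "off". The function names (`half_adder`, `full_adder`, `add_numbers`) are made up for this example, and a real vacuum tube computer was wired very differently. The sketch only shows the idea that simple on/off rules can add numbers.

```python
def half_adder(a, b):
    """Add two on/off signals. Returns (sum_bit, carry_bit)."""
    sum_bit = a != b      # on when exactly one input is on
    carry_bit = a and b   # on only when both inputs are on
    return sum_bit, carry_bit


def full_adder(a, b, carry_in):
    """Add two on/off signals plus a carry from the previous column."""
    first_sum, first_carry = half_adder(a, b)
    sum_bit, second_carry = half_adder(first_sum, carry_in)
    return sum_bit, first_carry or second_carry


def add_numbers(x, y, width=8):
    """Add two whole numbers one binary column at a time.

    Only `width` columns are kept, so very large answers wrap around,
    much like a small machine running out of switches.
    """
    carry = False
    result = 0
    for column in range(width):
        a = bool((x >> column) & 1)  # is this switch on in x?
        b = bool((y >> column) & 1)  # is this switch on in y?
        sum_bit, carry = full_adder(a, b, carry)
        if sum_bit:
            result |= 1 << column
    return result


print(add_numbers(5, 3))  # prints 8
```

Each column needs only a handful of switches, so a machine with thousands of them can add quite large numbers.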

The Transistor and the Microprocessor

Developed by Bell Labs in 1947, transistors are made of a semiconductor (usually silicon) and, like vacuum tubes, can turn circuits on or off. Today’s technology makes it possible to build transistors only a few nanometers across. This allows computer manufacturers to make microprocessors (the brain of the computer) small enough to fit in the palm of your hand, but still capable of carrying out trillions of calculations in a single second.

Birth of the Internet: Computer Networks

The most recent stepping stone in the history of computers has been the birth of the Internet and other networks. In 1973, Bob Kahn and Vint Cerf developed the basic idea of the Internet, a form of communication between different computers through packets of data. Tim Berners-Lee developed the World Wide Web, a network of Web servers, in 1991. A year later, the number of hosts (computers connected to the Internet) exceeded one million.
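The idea of sending data in packets can be sketched in a few lines of Python. This is a toy example, not the real Internet protocols: the functions `split_into_packets` and `reassemble` and the packet size of 4 letters are made up for illustration. A message is cut into small numbered pieces, the pieces may arrive in any order, and the numbers let the receiver put them back together.

```python
import random


def split_into_packets(message, size=4):
    """Cut a message into numbered pieces: (packet_number, text)."""
    return [
        (number, message[start:start + size])
        for number, start in enumerate(range(0, len(message), size))
    ]


def reassemble(packets):
    """Sort packets by number and join the pieces back together."""
    return "".join(text for _, text in sorted(packets))


packets = split_into_packets("Hello, computer!")
random.shuffle(packets)      # packets can arrive out of order
print(reassemble(packets))   # the original message comes back
```

Because every packet carries its own number, the order in which packets arrive does not matter.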

The User Revolution

Fortunately for Apple, it had another great idea. One of the strongest qualities of the Apple II was being “user friendly.” For Steve Jobs, developing truly user-friendly computers became a personal mission in the early 1980s. What really inspired him was a visit to PARC (Palo Alto Research Center), an innovative computer lab run by the Xerox Corporation. Xerox had begun developing computers in the early 1970s, believing that they would make paper (and Xerox’s highly lucrative copiers) obsolete.

One of PARC’s research projects was a $40,000 advanced computer called the Xerox Alto. Unlike most microcomputers released in the 1970s, which were operated by entering text commands, the Alto had a desktop-like screen with small picture icons that could be moved with a mouse. This was the first graphical user interface (GUI, pronounced ‘gooey’), an idea conceived by Alan Kay (1940-) and now used on virtually every modern computer. The Alto borrowed some of its ideas, including the mouse, from the 1960s computer pioneer Douglas Engelbart (1925-2013).

Truly User-friendly Computers

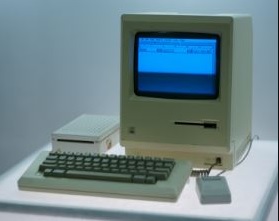

Back at Apple, Jobs launched his own version of the Alto project to develop an easy-to-use computer called PITS (Person in the Street). This machine became the Apple Lisa, released in January 1983, the first widely available computer with a GUI desktop. With a retail price of $10,000, three times the cost of an IBM personal computer (PC), the Lisa was a commercial failure. But it paved the way for a better and cheaper machine called the Macintosh that Jobs revealed a year later, in January 1984. With its memorable launch ad for the Macintosh, inspired by George Orwell’s novel 1984 and directed by Ridley Scott (director of the dystopian film Blade Runner), Apple hit IBM’s monopoly hard, criticizing what it described as the firm’s dominant, even totalitarian, approach: Big Blue was really Big Brother. Apple’s ad promised a different view: “On January 24th, Apple Computer will introduce Macintosh. And you’ll see why 1984 won’t be like ‘1984’” (referring to George Orwell’s novel). The Macintosh was a critical success and helped invent the new field of desktop publishing in the mid-1980s, and yet it never came close to challenging IBM’s position.

Ironically, Jobs’s easy-to-use machine also helped Microsoft remove IBM as the world leader in computing. When Bill Gates saw how the Macintosh worked, with its desktop and easy-to-use icons, he released Windows, a graphical upgrade to his MS-DOS software. Apple viewed this as blatant plagiarism and filed a $5.5 billion copyright lawsuit in 1988. Four years later, the case ended with Microsoft effectively securing the right to use the “look and feel” of the Macintosh in all current and future versions of Windows. Microsoft’s Windows 95 system, released three years later, had an easy-to-use desktop similar to the Macintosh’s, with MS-DOS running behind the scenes.

Facts About Computers

• A computer is a machine that takes input from the person and produces an output. So you can give the computer a command and it will follow it to produce a result.

• Computers have a microprocessor that can do calculations very quickly. Many things have microprocessors, such as cars, washing machines, dishwashers, and even your television.

• Computers have RAM, which holds the information the computer is working on while it is switched on. The hard drive keeps your programs and files even when the computer is switched off.

• As computers run, they get hot. Computers have fans to keep them cool.

Computer Vocabulary

• Abacus: counting device made of beads and strings.

• Modern: current, up to date.

• Command: order.

• Calculations: working out answers using math.

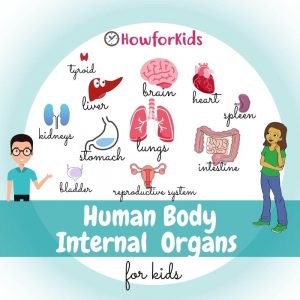
